You do not want to give Jensen Huang a 16-year head start

Nvidia's incredible moat and how it's like AWS in the early days

AWS’s 7-year head start

In a 2018 interview with David Rubenstein from the Carlyle Group (YouTube), Jeff Bezos explained how Amazon got a seven-year head start in a business that would end up being worth hundreds of billions of dollars:

“AWS completely reinvented the way companies buy computation. Then a business miracle happened. This never happens. This is the greatest piece of business luck in the history of business as far as I know. We faced no like-minded competition for seven years. It’s unbelievable. When you pioneer if you’re lucky you get a two year head start. Nobody gets a seven year head start. We had this incredible runway.”

Now, you can almost see Warren Buffett nodding along. In 2017, he commented, “You do not want to give Jeff Bezos a seven-year head start.” No, you do not.

But—and I cannot stress this enough—you really do not want to give Jensen Huang a 16-year head start.

Nvidia’s 16-year head start with AI

In 2007, Nvidia introduced CUDA, a parallel computing platform and API for GPUs. Now, this might sound like technical gobbledygook, but what it really means is that Nvidia built a way for people to use its graphics cards for something other than video games. This was an important moment. Back then, hardly anyone outside a few research labs was thinking about GPU computing for general tasks like AI or scientific simulations. But Nvidia was. And it turns out that this move, which seemed niche at the time, was a masterstroke.

CUDA gave Nvidia a multi-year head start over alternatives like OpenCL, whose first implementations, to be honest, didn’t even ship until 2009. And even then, it was like, “Okay, but CUDA is still better.” Nvidia was playing chess; everyone else was playing checkers. Here’s why that matters:

Maturity and optimization: Nvidia has had over a decade to optimize CUDA. That’s 10+ years of tweaking, tuning, and making sure its GPUs work seamlessly with its software. The result? Blazing fast performance. CUDA basically turns GPUs from single-purpose graphics chips into general-purpose computing beasts, capable of taking on advanced tasks like physics simulations and chemical modeling. It’s not just hardware; it’s hardware and software that have been tuned together for more than a decade.

Tooling and ecosystem: CUDA isn’t just some platform and API. Nvidia has built a whole ecosystem around it with debuggers, profilers, libraries, and documentation. If you want to do machine learning, it’s not just that CUDA is an option—it’s the option. The tooling around CUDA, combined with Nvidia’s hardware, makes it the go-to for AI workloads.

Performance: Look, when you’re talking about AI and high-performance computing, you’re talking about Nvidia. The integration between CUDA and Nvidia GPUs results in real-world performance that’s hard to match. It’s not just about theoretical benchmarks; it’s about actual results in the field.

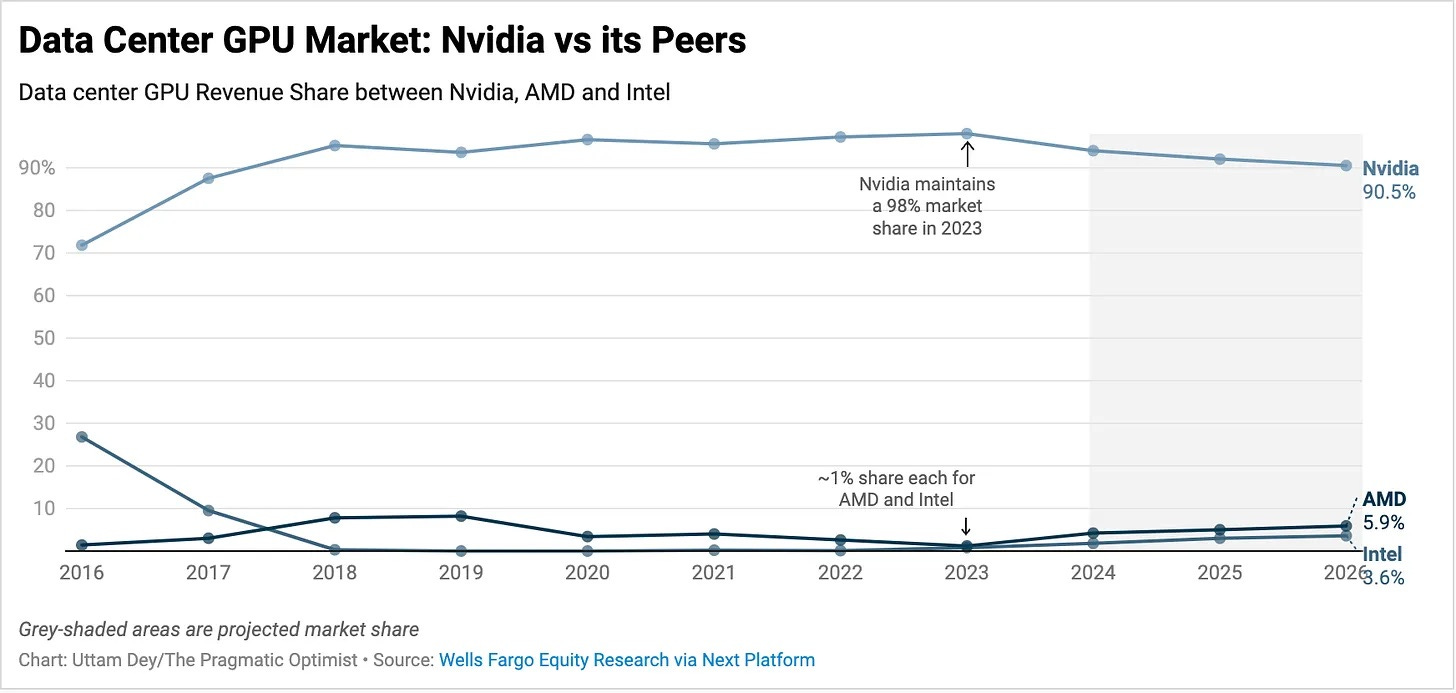

And here’s the kicker: Nvidia’s biggest threats, Intel and AMD, are still years away from putting any real pressure on it. Years! While Intel and AMD are busy trying to catch up, Nvidia is off building the future.

Developer mindshare: At this point, CUDA is practically synonymous with GPU programming. Go on Stack Overflow, and you’ll find way more expertise in CUDA than OpenCL. Developers aren’t just using CUDA; they’re learning it, mastering it, and building their careers around it. As analyst Daniel Newman put it, “Customers will wait 18 months to buy an Nvidia system rather than buy an available, off-the-shelf chip from either a startup or another competitor.” That’s loyalty. That’s a moat.

Business strategy: Nvidia has a strong incentive to keep improving CUDA because every improvement means more developers locked into its ecosystem, which means more GPUs sold. OpenCL, as a vendor-neutral API, has no single company with that same incentive behind it. Nvidia’s business model is basically: “Build the best tools, sell the most GPUs.” It’s working.

“No one even comes close”

One of Nvidia’s most prominent customers is Mustafa Suleyman, co-founder of DeepMind and now co-founder of Inflection AI [1]. His company recently raised $1.3 billion at a $4 billion valuation. And what does Suleyman say about Nvidia? He says there’s no obligation to use Nvidia’s products, but also, “None of them come close” to Nvidia’s offerings. If you’re in AI, you’re using CUDA.

And this is what happens when you give Nvidia a 16-year head start. CUDA was a bet Nvidia made early, and that bet is paying off in a big way. Even as competitors try to catch up, CUDA remains the gold standard for GPU-accelerated software. It’s not just about the chips; it’s about the entire software ecosystem Nvidia has built around those chips.

Nvidia's competitors can't “compete with a company that sells computers, software, cloud services and trained A.I. models, as well as processors.”

Patrick Moorhead, CEO of Moor Insights and Strategy, says it best: “The hardest part of the equation is software, a moat Nvidia has built with CUDA and its libraries and workflows.” Nvidia isn’t just selling chips; it’s selling the future of computing. And no one else is even close.

How Nvidia’s moat with AI is like AWS

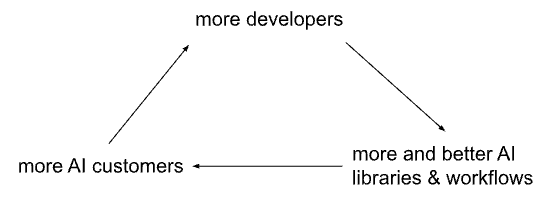

So, how does this all tie back to AWS? Well, Nvidia’s advantage today is a lot like the advantage AWS had in the early days of cloud computing. AWS got a head start, built out its infrastructure, and became the default option for developers. The more developers used AWS, the better AWS got, which attracted more developers, which made AWS even better. It’s a flywheel.

Nvidia today has the same advantage AWS has had in cloud computing for years because it's benefited from the same flywheel:

The more developers choose Nvidia, the more CUDA libraries and workflows there are for working with GPUs, and the better they get. The better those tools get, the more companies base their generative AI strategies on Nvidia. And the more companies do that, the more developers choose to learn CUDA over other frameworks.

Just as developers built libraries, workflows, and expertise around using AWS, they have done the same with CUDA over the past decade.

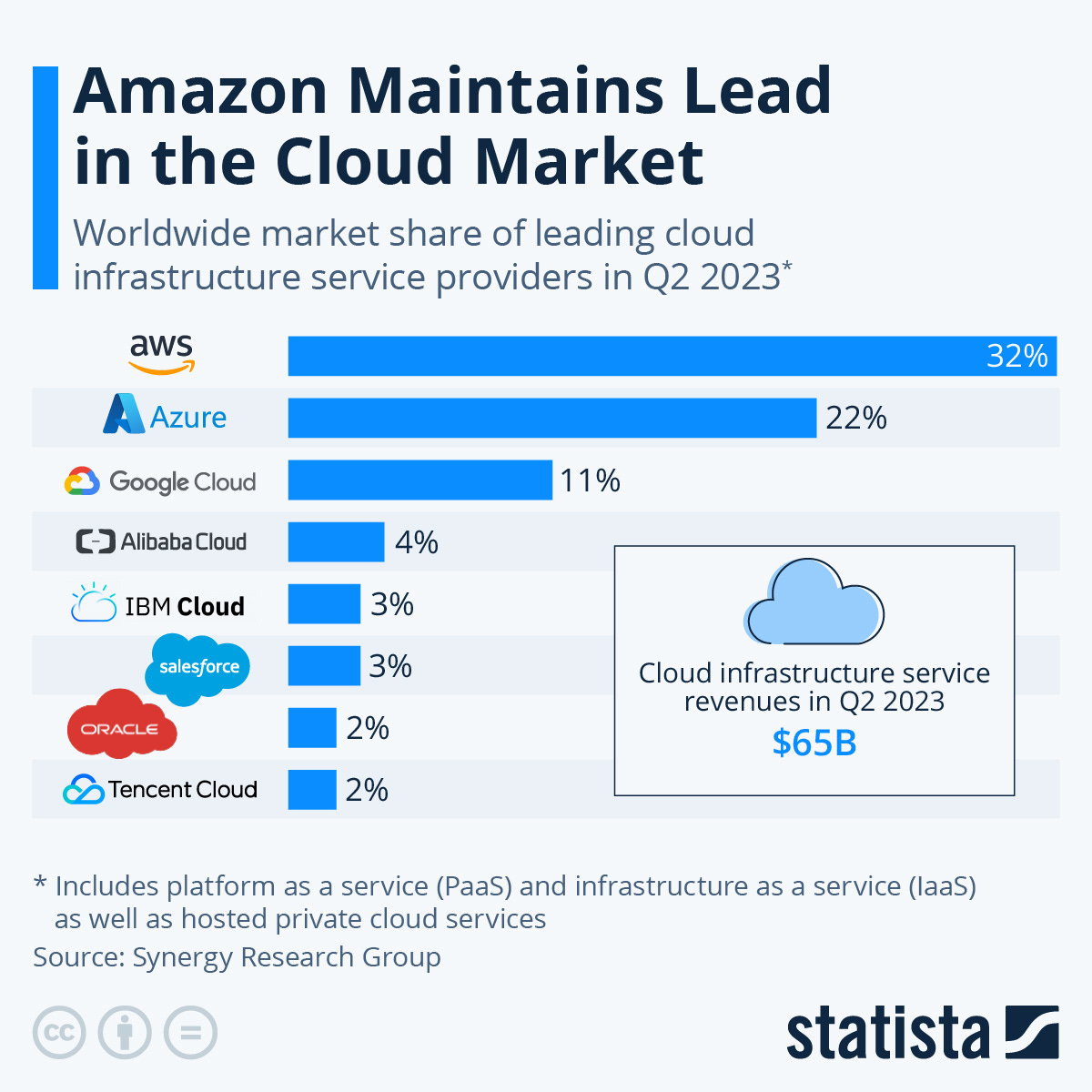

If anything, Nvidia’s moat is even deeper than AWS’s. Microsoft and Google have chipped away at AWS’s lead in the cloud.

But in AI? Nvidia is the undisputed leader, and it’s going to be a while before anyone catches up.

Suffice it to say, you do not want to give Jensen Huang a 16-year head start on building the critical tools for the most important industry of our generation.

About

Inverteum is a proprietary trading firm that specializes in long-short algorithmic strategies to generate returns in both bull and bear markets.

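[1] In March 2024, Microsoft hired Suleyman and most of Inflection AI’s staff and licensed its models, a deal widely described as an acquisition in all but name.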